Welcome to the homepage for Numerical Analysis II (Math 4510/6510)! I will post all the homework assignments for the course on this page. Our texts for the course are Cheney and Kincaid, Numerical Mathematics and Computing (7th edition), and Demmel, Applied Numerical Linear Algebra.

In this class, we will study two fundamental topics in numerical mathematics: the numerical solution of differential equations and numerical linear algebra. Mathematica will be an integral part of the course. UGA now has a site license for Mathematica, and you can get a copy for your laptop or home computer free of charge. I encourage you to work through some introductory material on Mathematica (the book ‘Mathematica deMYSTiFieD’ is actually pretty good) to get your bearings. Virtually all of the homework assignments and projects will require you to write short Mathematica programs.

Lecture Notes and Homework Online

Here are links to my lecture notes for the course; these will be posted as I write them. Each lecture is usually accompanied by one or more Mathematica notebooks explaining and demonstrating the concepts from class. These are Mathematica 7 notebooks.

- Lecture 1. Introduction to Numerical Solution of Ordinary Differential Equations.

- Heat Transfer ODE (Mathematica Demo).

- Families of Solutions of ODEs (Mathematica Demo).

- High Altitude Free Fall paper.

- The Taylor Method for Solving ODEs (Mathematica Demo).

- Lecture 2. Runge-Kutta methods for solving ODEs.

- Runge-Kutta Methods (Mathematica Demo)

- Lecture 3. Error Analysis for ODE solvers and Adaptive Runge-Kutta-Fehlberg methods

- Lecture 4. Predictor-Corrector (Adams-Bashforth-Moulton) solvers for ODEs

- Runge-Kutta Methods vs Predictor-Corrector Methods (Mathematica Demo)

- Lecture 5. Systems of ODEs

- Runge-Kutta Methods for Systems of ODEs (Mathematica Demo)

- Lecture 6. Boundary Value Problems (I)

- Lecture 7. Boundary Value Problems (II) (The link now downloads the correct file. Beware of a number of small typos in this set of notes.)

- Finite Element Solver for Linear BVP (Mathematica Demo)

- Lecture 8. Introduction to PDE (I)

- Lecture 9. Introduction to PDE (II)

- Lecture 10. Grid Methods for Elliptic Boundary Value Problems (Laplace/Poisson Problems)

- Lecture 11. Variational Methods for Elliptic Boundary Value Problems (Laplace/Poisson Problems)

- Lecture 12. Numerical Linear Algebra. Introduction and Error Analysis. Condition Number. Backward Stability.

- Lecture 12a. Matrices and Matrix norms.

- Lecture 12b. Introduction to Perturbation Theory.

- Lecture 13. Perturbation Theory II.

- Lecture 14. Gaussian Elimination

- Lecture 15. Gaussian Elimination II: Algorithm for LU Decomposition

- Lecture 16. Error Analysis for LU Decomposition

- Lecture 17. Estimating Condition Numbers

- Lecture 18. Iterative Refinement of Solutions to Ax = b

- Lecture 19. The Cholesky Decomposition and Symmetric Positive Definite Matrices

- Lecture 20. Matrix Ordering and Sparsity of LU Decomposition of Sparse Matrices (Mathematica)

- Lecture 21. Linear Least-Squares Problems. Normal Equations, QR Decomposition, and Singular Value Decomposition

- Lecture 22. Condition Number for Least-Squares Problems

- Lecture 23. QR Decomposition in Practice. Householder transformations

- Lecture 24. Iterative Methods in Numerical Linear Algebra (basics)

- Lecture 25. Various Splittings for Iterative Methods

- Lecture 26. Convergence of Iterative Methods

- Lecture 27. Chebyshev Acceleration

Homework Assignments

- Homework 1. Due 1/30/2014.

- Homework 2. Due 2/13/2014.

- Homework 3. Due 3/20/2014.

- Homework 4. Due 4/3/2014.

Differential Equations Project – Due 3/18/2014

- A magnetic railgun is used to launch a steel cylinder 25 cm in diameter and weighing 2 tons from a naval installation in Pearl Harbor, HI, at a hostile carrier group 250 miles due west. (See YouTube video of test railgun shot.)

- Use the model for air density at different heights given in the parachute drop project, together with the drag coefficient for a cylinder, to develop a model for the force of air resistance on the projectile. Account for the force of gravity on the projectile using a circular model of the Earth (some solutions may orbit the Earth before striking the carrier). The projectile, the target, and the center of the Earth will always lie in the same plane, so the flight can be modeled in two dimensions. Combine all this with Newton’s second law to develop a differential equation for the flight of the projectile.

- Implement a shooting method in Mathematica that, given a launch angle θ, solves for the launch speed that will land the cylinder on target. Use the RK4 or predictor-corrector methods to solve the initial value problems that arise inside the shooting method.

- Assuming that the hostile carrier has the dimensions of the U.S.S. Nimitz, determine experimentally the window of launch angles and speeds that results in a hit. Bonus points will be awarded for the trajectory with the lowest initial speed. What is the kinetic energy of the projectile on impact? How does this compare to the chemical energy of an equivalent weight of TNT?
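The project itself is to be written in Mathematica; the Python sketch below only illustrates how the pieces above fit together: an RK4 integrator for the planar equations of motion (inverse-square gravity toward the Earth's center plus quadratic drag), wrapped in a shooting method that bisects on the launch speed for a fixed angle. Several inputs are assumptions, not part of the assignment: an exponential atmosphere (1.225 kg/m³ at sea level, 8.5 km scale height) stands in for the parachute-drop density model, `CD = 0.8` is a placeholder drag coefficient, "2 tons" is read as 2000 kg, the Earth's rotation is ignored, and shots that never land are simply treated as overshoots. All function names are hypothetical.

```python
import math

# Sketch only: names are hypothetical; values marked ASSUMPTION are placeholders.
GM = 3.986004418e14           # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6             # mean Earth radius, m (circular-Earth model)
MASS = 2000.0                 # ASSUMPTION: "2 tons" read as 2000 kg
AREA = math.pi * 0.125**2     # cross-section of a 25 cm diameter cylinder, m^2
CD = 0.8                      # ASSUMPTION: placeholder drag coefficient
TARGET = 250 * 1609.344       # 250 miles along the Earth's surface, m


def air_density(h):
    """ASSUMPTION: exponential atmosphere standing in for the parachute-drop model."""
    return 1.225 * math.exp(-max(h, 0.0) / 8500.0)


def rhs(s):
    """Newton's second law for s = (x, y, vx, vy), with the origin at the
    Earth's center and the launch site at (0, R_EARTH)."""
    x, y, vx, vy = s
    r = math.hypot(x, y)
    speed = math.hypot(vx, vy)
    g = -GM / r**3                    # inverse-square gravity toward the center
    d = -0.5 * air_density(r - R_EARTH) * CD * AREA * speed / MASS  # quadratic drag
    return (vx, vy, g * x + d * vx, g * y + d * vy)


def rk4_step(s, dt):
    """One step of the classical fourth-order Runge-Kutta method."""
    def shift(k, c):
        return tuple(si + c * ki for si, ki in zip(s, k))
    k1 = rhs(s)
    k2 = rhs(shift(k1, dt / 2))
    k3 = rhs(shift(k2, dt / 2))
    k4 = rhs(shift(k3, dt))
    return tuple(si + dt / 6 * (a + 2 * b + 2 * c + e)
                 for si, a, b, c, e in zip(s, k1, k2, k3, k4))


def surface_range(v0, theta, dt=0.1, t_max=5000.0):
    """Solve the IVP for launch speed v0 (m/s) and elevation theta (rad) and
    return the distance along the surface to the impact point, in m."""
    s = (0.0, R_EARTH, v0 * math.cos(theta), v0 * math.sin(theta))
    t = 0.0
    while t < t_max:
        new = rk4_step(s, dt)
        t += dt
        h_old = math.hypot(s[0], s[1]) - R_EARTH
        h_new = math.hypot(new[0], new[1]) - R_EARTH
        if h_new < 0.0:
            # Interpolate the central angle at the moment the altitude reaches zero.
            frac = h_old / (h_old - h_new)
            a_old = math.atan2(s[0], s[1])
            a_new = math.atan2(new[0], new[1])
            return R_EARTH * (a_old + frac * (a_new - a_old))
        s = new
    return math.inf                   # never landed (e.g. orbiting): an overshoot


def shoot(theta, v_lo=100.0, v_hi=5000.0, tol=1e-3):
    """Shooting method: bisect on the launch speed until the shot lands on TARGET."""
    def miss(v):
        return surface_range(v, theta) - TARGET
    if miss(v_lo) > 0 or miss(v_hi) < 0:
        raise ValueError("target not bracketed by [v_lo, v_hi]")
    while v_hi - v_lo > tol:
        mid = 0.5 * (v_lo + v_hi)
        if miss(mid) < 0:
            v_lo = mid
        else:
            v_hi = mid
    return 0.5 * (v_lo + v_hi)
```

For example, `shoot(math.radians(30))` returns a launch speed whose computed impact point lies on the 250-mile arc to the target. Bisection is used here because, for a fixed moderate angle, the range typically increases with launch speed, so a bracketing interval is easy to find; a secant iteration would converge in fewer IVP solves but needs more care.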

Linear Algebra Project – Due 4/24/2014

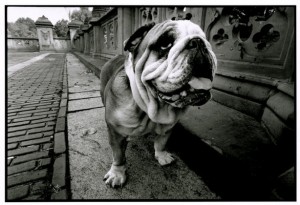

Consider this 614 x 420 pixel black-and-white image of a dog.

Divide the upper-left 608 x 416 pixels of the image (608 and 416 are the largest multiples of 32 that fit) into 32 x 32 pixel blocks, giving 19 x 13 = 247 blocks. Convert the image data in each block to grayscale values between 0 and 1, one value per pixel, and think of each block as a vector in R^{1024} (since 32 x 32 = 1024).
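As a rough illustration (in Python with NumPy rather than Mathematica), the block decomposition is just a reshape. This sketch assumes the image has already been converted to a 2-D array of grayscale values in [0, 1] with 420 rows and 614 columns (reading 614 x 420 as width x height); `image_to_blocks` and `blocks_to_image` are hypothetical names.

```python
import numpy as np

# Sketch only: hypothetical helpers, assuming a 2-D grayscale array in [0, 1].


def image_to_blocks(img, block=32):
    """Cut the upper-left part of img into block x block tiles and return them
    as the rows of an (n_blocks, block*block) matrix."""
    rows = (img.shape[0] // block) * block    # e.g. 420 -> 416
    cols = (img.shape[1] // block) * block    # e.g. 614 -> 608
    return (img[:rows, :cols]
            .reshape(rows // block, block, cols // block, block)
            .swapaxes(1, 2)                   # group pixels tile by tile
            .reshape(-1, block * block))      # each tile -> one vector in R^1024


def blocks_to_image(tiles, rows, cols, block=32):
    """Inverse of image_to_blocks for a rows x cols region (multiples of block)."""
    return (tiles.reshape(rows // block, cols // block, block, block)
                 .swapaxes(1, 2)
                 .reshape(rows, cols))
```

Each row of the result is one block flattened row by row, with blocks ordered left to right, then top to bottom; `blocks_to_image` reverses the process so that compressed blocks can be reassembled into a picture.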

Suppose we considered the cloud of 1024-vectors obtained by taking blocks from hundreds, or even millions, of real images. Would these vectors be spread uniformly across R^{1024}? Of course not! Image data is quite special: it comes very close to lying in a much lower-dimensional subspace of R^{1024}. We can exploit this special structure to design an image compression algorithm based on linear least-squares problems.
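One way to make this concrete, and only one of many, is the PCA-style approach mentioned on this page, sketched here in Python with NumPy: find an orthonormal basis for the k-dimensional subspace that best fits a set of training blocks (one block per row), store each block as its least-squares coefficients in that basis, and reconstruct from those coefficients. The sketch fits a subspace through the origin (no mean subtraction), and the function names are hypothetical.

```python
import numpy as np

# Sketch only: hypothetical helpers; blocks are stored one per row (n x 1024).


def train_basis(training_blocks, k):
    """Return a 1024 x k matrix whose orthonormal columns span the k-dimensional
    subspace that best fits the training blocks in the least-squares sense."""
    # The top-k right singular vectors of the data matrix span that subspace.
    _, _, vt = np.linalg.svd(training_blocks, full_matrices=False)
    return vt[:k].T


def compress(blocks, basis):
    """For each block x, solve min_c ||basis @ c - x|| and keep only c."""
    coeffs, *_ = np.linalg.lstsq(basis, blocks.T, rcond=None)
    return coeffs.T                           # k numbers per block instead of 1024


def decompress(coeffs, basis):
    """Map each block's k coefficients back to a vector in R^1024."""
    return coeffs @ basis.T
```

Because the basis columns are orthonormal, the least-squares solve reduces to `blocks @ basis`; `lstsq` is used only to keep the least-squares formulation visible. Keeping k coefficients per block stores k/1024 of the original numbers, plus the basis matrix itself, which the decoder also needs.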

- Detailed writeup of the problem and working sample code. In addition, here is a set of sample functions developed during the spring 2011 class by Ryan Livingston, together with Ryan’s compression matrices.

- We discovered in 2014 that training PCA on JPEG-compressed images mostly produces an algorithm that is very good at reproducing JPEG artifacts and much less good at reproducing images. Accordingly, this time you should train on the Uncompressed Colour Image Database. If you are absolutely desperate, a mirror is available on my site, but please try the other link first: the database is about half a gigabyte, and my web hosting service will not appreciate it if every student in the class downloads that much from my page.

- A Tutorial on Principal Component Analysis. (Shlens)

- Independent Component Analysis: Algorithms and Applications. (Hyvärinen, Oja)

- ICA Cocktail Party Demonstration.

Compressed Dog Competition

When all the projects are submitted, we hold a slide show day where everyone votes on the best version of each image. The overall winner receives the Math 4510 Best Picture Award for the year. This very large zip folder contains the notebook used for the comparison, which presented A/B compressions of the same images to the class and then attempted to rank the submissions from these pairwise comparisons using the HodgeRank method. Note that many of the compressed images were rather close, so what look like big differences in rankings are not big differences in students’ ideas or effort. Also note that while these are the rankings used to decide the awards below, they are not grades and did not particularly correlate with grades either.
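The comparison notebook itself is not reproduced here, but the least-squares core of HodgeRank can be sketched in a few lines of Python with NumPy. Each A/B comparison between items i and j contributes an edge with an observed margin y_ij (how strongly j was preferred to i) and a weight w_ij; the scores s minimize the weighted sum of (s_j - s_i - y_ij)^2 over all edges. How votes are turned into margins is an assumption left to the caller (one simple choice is the fraction of voters preferring j minus the fraction preferring i), and `hodgerank` is a hypothetical name.

```python
import numpy as np

# Sketch only: hypothetical helper implementing the least-squares step of HodgeRank.


def hodgerank(n_items, comparisons):
    """comparisons: list of (i, j, y_ij, w_ij), meaning item j beat item i by
    margin y_ij, observed with weight w_ij > 0. Returns scores s minimizing
    sum w_ij * (s_j - s_i - y_ij)^2 (minimum-norm solution)."""
    m = len(comparisons)
    grad = np.zeros((m, n_items))   # discrete gradient: edge-vertex incidence matrix
    y = np.zeros(m)
    sqrt_w = np.zeros(m)
    for e, (i, j, y_ij, w_ij) in enumerate(comparisons):
        grad[e, i], grad[e, j] = -1.0, 1.0
        y[e] = y_ij
        sqrt_w[e] = np.sqrt(w_ij)
    # Weighted least squares: scale each edge's equation by sqrt(w_ij).
    s, *_ = np.linalg.lstsq(grad * sqrt_w[:, None], y * sqrt_w, rcond=None)
    return s
```

When the comparison graph is connected, the scores are determined only up to an additive constant; `lstsq` returns the minimum-norm solution, which fixes that constant by making the scores sum to zero. The full HodgeRank method also studies the residual of this fit, which measures how inconsistent (for example, cyclic) the votes are.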

Previous Winners

Fred Hohman in the 50% compression category (2014). Fred used PCA on a large training set to achieve striking compression results. Here are Fred’s thoughts on his method. Here is Fred’s prizewinning 50% compression matrix.

Irma Stevens in the 90% compression category (2014). Here are Irma’s thoughts on her method. Here is Irma’s prizewinning 90% compression matrix.

Ke Ma in the 99% compression category (2014).

Ryan Livingston, judged best overall in 2011. Here are his thoughts on how he constructed the matrices, together with his test set of images.

Winning Compressions

To get a sense of how good the student compressions are, here is a slideshow of the best results from 2014. For students in the class, I’m also posting uncompressed, unlabeled versions of the slideshow images so that you can look for subtle details.


Syllabus

Please read the course syllabus. If you can get by with the online copy, save a tree and don’t print it out.

Material on this page is a work-for-hire produced for the University of Georgia.